How does scoring work?

Scoring in Silktide is complex and changes over time. This guide explains how it works.

Scores

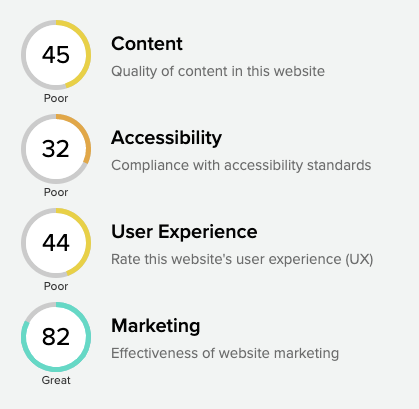

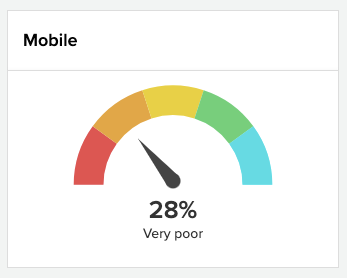

Scores range from 0 – 100, and are shown for “categories” in your website, e.g. Content, Accessibility, and Marketing.

Scores can appear as circles:

Or as gauges:

Depending on the modules you have purchased, you may not see every score. Which scores you see also depends on your user permissions and the configuration of your account.

Calculating scores

Scores are calculated from a weighted mix of the progress of hundreds of Actions (see below). Each score refers to a different selection of Actions, with different weights for each.

Some Actions are entirely manual – i.e. they cover tasks that must be performed manually. These manual Actions do not impact your score.
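As a rough illustration of the weighted mix described above, here is a minimal sketch. The `Action` class, its field names, and the example weights are hypothetical and chosen only for illustration. Silktide's real Actions and weights are not published in this guide.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """Hypothetical stand-in for a Silktide Action."""
    name: str
    progress: float   # assumed to run from 0 to 100
    weight: float     # assumed relative weight within one score
    manual: bool = False


def category_score(actions):
    """Weighted average of Action progress, skipping manual Actions."""
    scored = [a for a in actions if not a.manual and a.weight > 0]
    total_weight = sum(a.weight for a in scored)
    if total_weight == 0:
        return 0.0
    return sum(a.progress * a.weight for a in scored) / total_weight


example_actions = [
    Action("Fix spelling errors", progress=80, weight=3),
    Action("Add alternative text", progress=40, weight=1),
    Action("Review tone of voice", progress=0, weight=5, manual=True),
]
example_score = category_score(example_actions)
```

In this sketch the manual Action is ignored entirely, so the result depends only on the two automated Actions.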

Silktide applies penalties to scores when specific negative criteria are met. For example, a webpage that is made with Adobe Flash will score very poorly overall, even if it meets the majority of other criteria.

Penalties more accurately reflect the experience of end users: good points add value, but a few especially bad points can erode all of that value (e.g. a fast and well written web page that contains Flash animation).
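One way to picture a penalty is as a multiplier applied after the weighted score is calculated. The sketch below assumes penalties are multiplicative factors between 0 and 1. Both that assumption and the factor used for Flash are hypothetical, as this guide does not state how penalties are applied internally.

```python
def apply_penalties(base_score, penalty_factors):
    """Scale a score down by each penalty factor in turn.

    Assumes every factor lies between 0 (removes all value)
    and 1 (no penalty). This is an illustrative model only.
    """
    score = base_score
    for factor in penalty_factors:
        if not 0.0 <= factor <= 1.0:
            raise ValueError("penalty factors must be between 0 and 1")
        score *= factor
    return score


# A page scoring 90 before penalties, with a hypothetical Flash factor of 0.5.
penalised = apply_penalties(90.0, [0.5])
```

With these made-up numbers, a single severe penalty halves an otherwise strong score, which mirrors the idea that one especially bad point can outweigh many good ones.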

Scores are then adjusted to fit a Normal distribution, i.e. a bell curve with a mean score of 50. These calibrations are updated once a year.
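To show what fitting scores to a bell curve can look like, here is a minimal sketch that maps a raw score to its percentile within a reference set and then to the matching point on a Normal distribution with a mean of 50. The standard deviation of 15, the reference set, and the percentile formula are all assumptions. Silktide's actual calibration method is not described in this guide.

```python
from statistics import NormalDist


def calibrate(raw_score, reference_scores, mean=50.0, stdev=15.0):
    """Map a raw score onto a Normal curve via its percentile rank.

    reference_scores is a hypothetical sample of raw scores from
    other websites. stdev=15 is an assumed value.
    """
    n = len(reference_scores)
    below = sum(1 for s in reference_scores if s < raw_score)
    equal = sum(1 for s in reference_scores if s == raw_score)
    # Mid-rank percentile, kept strictly between 0 and 1.
    percentile = (below + 0.5 * equal + 0.5) / (n + 1)
    value = NormalDist(mean, stdev).inv_cdf(percentile)
    # Keep the result inside the displayed 0 to 100 range.
    return min(100.0, max(0.0, value))


reference = [10, 20, 30, 40, 50]
median_calibrated = calibrate(30, reference)
```

In this sketch a website at the middle of the reference set lands on the mean of 50, and websites above or below the middle move away from 50 in the matching direction.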

Calculating Action progress

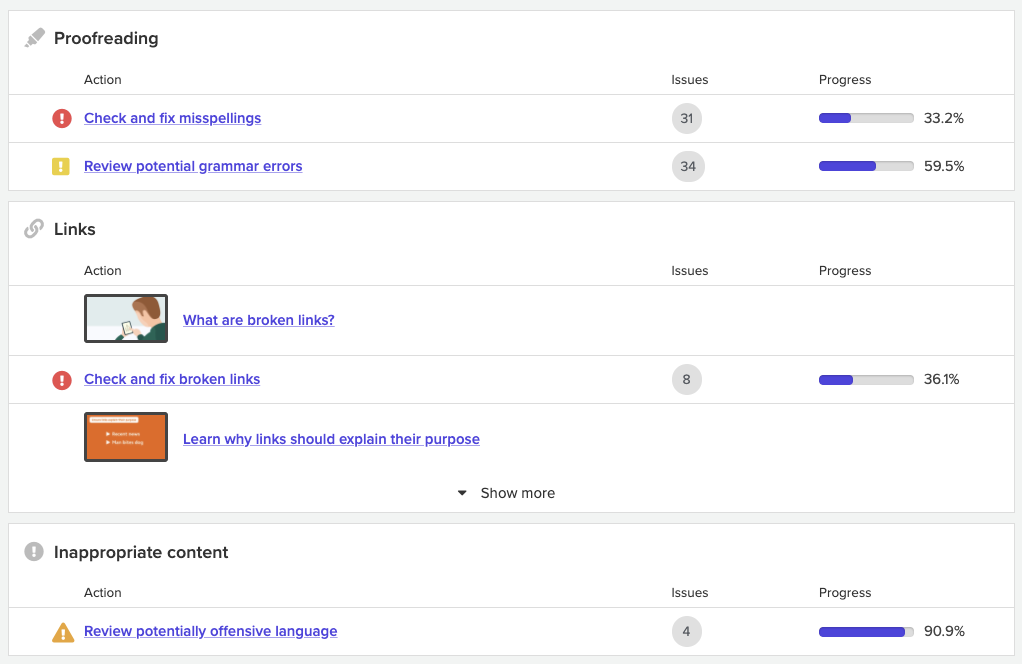

Each Action is scored and given a “progress” measure, visible on the right-hand side of each Actions screen.

To calculate their progress, each Action:

- Estimates how much time is required to complete the Action. For example, how long it should take to fix all known spelling errors.

- Divides this time by the size of a relevant component of the website. E.g. for spelling, this might be the number of words in the website.

- Calculates progress from the ratio of “time to size”. The ratio is multiplied by a constant to calculate the progress for an individual Action.
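The steps above can be sketched as follows. This sketch assumes that progress is 100 minus the scaled ratio, limited to the range 0 to 100. The function name, the parameters, and the constant are all hypothetical, since the guide only states that the ratio is multiplied by a constant.

```python
def action_progress(minutes_to_fix, component_size, scale):
    """Estimate progress for one Action from a time-to-size ratio.

    minutes_to_fix: estimated time to complete the Action.
    component_size: size of the relevant part of the website,
                    e.g. the number of words for spelling.
    scale:          hypothetical constant for this Action.
    """
    if component_size <= 0:
        # Nothing to measure against; assumed to be complete.
        return 100.0
    ratio = minutes_to_fix / component_size
    remaining = ratio * scale
    return max(0.0, min(100.0, 100.0 - remaining))


# 50 minutes of spelling fixes on a 10,000 word website,
# with a made-up constant of 5000.
spelling_progress = action_progress(50, 10_000, 5000)
```

With these example numbers the Action comes out at roughly 75% progress. The same 50 minutes of work on a much smaller website would give a lower figure, because the remaining effort is larger relative to the size of the website.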

This is a simplification of what can be a very complex process. To give some examples:

- If one issue is repeated consistently across a template, it might be classed as easier to fix than many different issues.

- Some contexts – such as PDFs – may be considered harder to fix than others – such as CMS webpages.

- Some Actions consider multiple timing factors and blend them, e.g. the time to find an issue, the time to publish a change, etc.

In all cases, the aim is to give a representative indication of how far along a given Action is. We have found this approach is far more instructive than simply measuring the percentage of problems over pages, or other simplistic measures.

WCAG level scoring

When testing Accessibility, Silktide applies the following weighting to different WCAG levels:

- WCAG A = 45%

- WCAG AA = 40%

- WCAG AAA = 15%

Note that each WCAG level is an extension of the level below it, i.e. AAA also includes everything from AA and A. In practice, this means that checks specific to AAA have minimal impact (~5%) on the overall score.
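The weighting above can be sketched as a simple weighted sum. The per-level scores in the example are invented. The second function shows one possible reading of the ~5% figure, under the assumption that the AAA level score is spread evenly across A, AA, and AAA checks. That reading is an assumption and not a documented formula.

```python
# Weights taken from the list above.
WCAG_WEIGHTS = {"A": 0.45, "AA": 0.40, "AAA": 0.15}


def accessibility_score(level_scores):
    """Combine per-level scores (0 to 100) using the WCAG weights."""
    return sum(
        WCAG_WEIGHTS[level] * level_scores[level] for level in WCAG_WEIGHTS
    )


def aaa_specific_share(aaa_weight=0.15, groups_in_aaa=3):
    """Share of the overall score driven by AAA-only checks.

    Assumes the AAA level covers three equally sized groups of
    checks (A, AA, and AAA-specific), which is a simplification.
    """
    return aaa_weight / groups_in_aaa


# Hypothetical per-level scores for one website.
overall = accessibility_score({"A": 90, "AA": 80, "AAA": 60})
```

Under that assumption, AAA-only checks account for about 0.05 of the overall score, in line with the ~5% quoted above.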

Rounding of scores

Scores are rounded to the nearest whole number, unless doing so would round a score up to 100.

This means that if a website scores (say) 99.5 for Accessibility, Silktide will display a score of 99 / 100 instead of 100 / 100. This is to avoid giving the incorrect impression that a score is ‘perfect’ when it is merely very close.
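The rounding rule can be expressed in a few lines. This sketch assumes that halves round up (so 72.5 becomes 73), which the guide does not specify. The function name is hypothetical.

```python
import math


def display_score(raw_score):
    """Round to the nearest whole number, but never round up to 100."""
    rounded = math.floor(raw_score + 0.5)  # assumed: halves round up
    if rounded >= 100 and raw_score < 100:
        # Very close to perfect, but not perfect: show 99 instead.
        return 99
    return rounded
```

Only a raw score of exactly 100 is displayed as 100. A raw score of 99.5 is displayed as 99, matching the example above.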

Previous scoring versions

Prior to January 2019, Silktide’s scoring was different. Some customers may still have our old scoring methodology enabled:

- Notices could count towards your summary score, but only very slightly.

- Penalties did not exist.

- Scores were not calibrated to meet a Normal distribution.

- The weighting of checks was different.
